VINST/ Visual Diaries

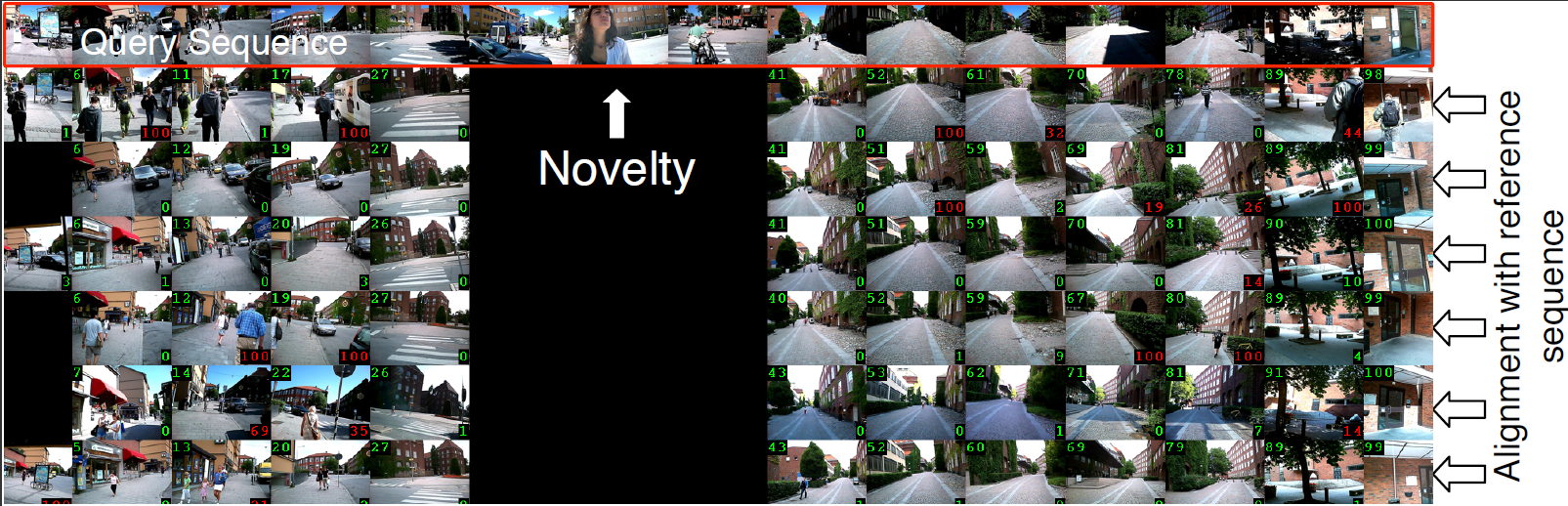

What Omid saw on his way to work on 3 different days.

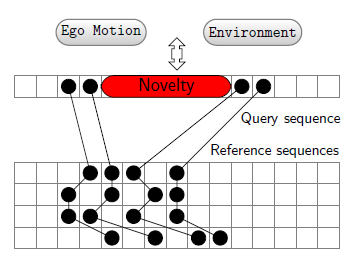

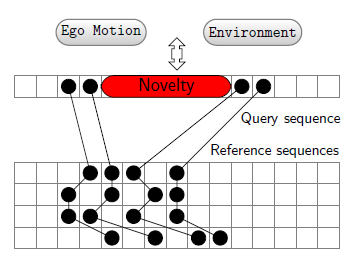

Novel ego motion detection: Ego motion can be affected by many things, such as the activity being performed and the environment. For a fixed activity (walking to work), factors such as an obstruction on the road, a departure from the normal activity, or an interaction with objects or people can cause deviations from the repeated ego motion. As a first attempt, we aimed to detect novel ego motions, which we planned to use as a simple process for selecting candidate memories. Such a memory selection process would select the memories depicted below from the sample data set depicted above.
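To make the idea concrete, here is a minimal sketch of one way to flag novel ego motion. It is not the method from the publication listed below. It assumes each frame has already been reduced to a fixed-length motion descriptor (a hypothetical representation), scores each frame of a new walk by its distance to the nearest frame from earlier walks, and reports runs of high-scoring frames as candidate memories. The function names, the threshold, and the minimum segment length are all illustrative assumptions.

```python
import numpy as np


def novelty_scores(query, references):
    """Distance from each query frame to its nearest reference frame.

    query:      (T, D) array of per-frame motion descriptors (hypothetical).
    references: list of (T_i, D) arrays from previously recorded walks.
    """
    bank = np.vstack(references)  # all reference frames, shape (N, D)
    # Pairwise Euclidean distances between query and reference frames: (T, N).
    dists = np.linalg.norm(query[:, None, :] - bank[None, :, :], axis=2)
    return dists.min(axis=1)


def novel_segments(scores, threshold, min_len=3):
    """Return (start, end) index pairs, end exclusive, for runs of at least
    min_len consecutive frames whose score exceeds the threshold."""
    segments = []
    start = None
    for t, score in enumerate(scores):
        if score > threshold:
            if start is None:
                start = t
        elif start is not None:
            if t - start >= min_len:
                segments.append((start, t))
            start = None
    # Close a run that extends to the final frame.
    if start is not None and len(scores) - start >= min_len:
        segments.append((start, len(scores)))
    return segments


# Synthetic example: two "ordinary" walks and a new walk with an unusual
# stretch in frames 5 to 9. The data is made up for illustration only.
references = [np.zeros((20, 2)), np.zeros((15, 2))]
query = np.zeros((12, 2))
query[5:10] = 10.0

scores = novelty_scores(query, references)
print(novel_segments(scores, threshold=1.0))  # [(5, 10)]
```

A nearest-neighbour score is used here only because it is simple to state. It ignores temporal order, so a sketch that respects the sequence of the walk would need to align the new video against earlier ones rather than compare frames independently.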

Using such an approach will allow us to highlight temporal segments in videos such as:

People:

KTH

- Professor: Stefan Carlsson
- Assistant Professor: Josephine Sullivan
- PhD Student: Omid Aghazadeh

Publications:

"Novelty detection from an Egocentric perspective"

Omid Aghazadeh, Josephine Sullivan, Stefan Carlsson. CVPR 2011. PDF, Poster

Resources

- W31 Dataset: This data set consists of 31 videos capturing the visual experience of a subject walking from a metro station to work, subsampled at 1 Hz. It contains 7236 images in total. Each image is annotated with a location ID drawn from 9 unique labels. Temporal segments corresponding to novel ego motions are annotated as well. Download the data set from here and the annotations from here.
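
As a quick sanity check on the figures above, the short calculation below derives the average length of a walk from the stated totals. It yields averages only, since the page does not give the length of each individual video.

```python
NUM_VIDEOS = 31        # stated number of videos
NUM_IMAGES = 7236      # stated total number of images
SAMPLE_RATE_HZ = 1.0   # stated subsampling rate

# Average number of frames per video, and the walk duration this implies.
frames_per_video = NUM_IMAGES / NUM_VIDEOS
seconds_per_video = frames_per_video / SAMPLE_RATE_HZ
minutes_per_video = seconds_per_video / 60

print(f"{frames_per_video:.1f} frames, "
      f"about {minutes_per_video:.1f} minutes per video on average")
```

Under these figures, each video holds roughly 233 frames on average, which at 1 Hz corresponds to a walk of a little under four minutes.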