Learning the 3D Context of Everyday Objects

In this work, we propose that local 3D structure is strongly correlated with object placement in everyday scenes. We call this correlation the 3D context of an object, and we present our approach to capturing the 3D context of different object classes.

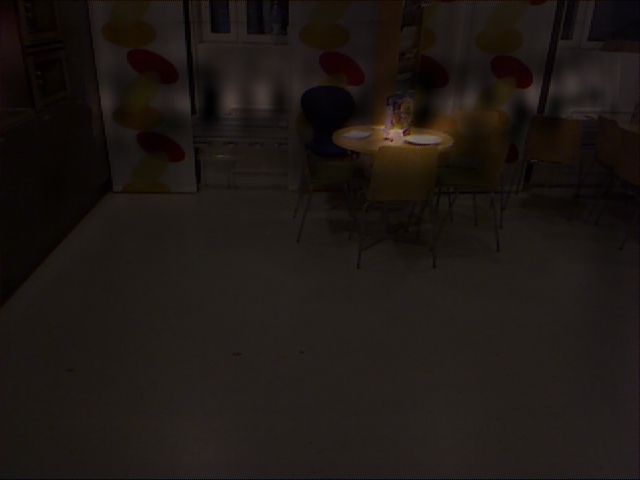

The left image shows an example scene in which the object, a cup, occupies only a very small part of the image. The right image shows the output of our method, where bright areas correspond to a high probability of object presence. Most object recognizers would scan the whole image and fail to find the object in this scene. However, we can rule out large portions of the image by exploiting the 3D context in which objects appear.
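As an illustration of how such an output could narrow the search, the following minimal sketch thresholds a per-pixel probability map and reports how much of the image is ruled out. It does not show how the 3D context itself is learned or how the map is computed. The function name `prune_search_region`, the threshold of 0.5, and the toy map are assumptions made for this example and are not part of the method described here.

```python
import numpy as np

def prune_search_region(prob_map, threshold=0.5):
    """Return a boolean mask of pixels to keep and the fraction ruled out.

    prob_map  : 2D array of per-pixel object-presence probabilities
                (assumed to be given, e.g. by a 3D-context model).
    threshold : hypothetical cut-off; pixels below it are discarded.
    """
    prob_map = np.asarray(prob_map, dtype=float)
    keep = prob_map >= threshold          # True where the object may be
    ruled_out = 1.0 - keep.mean()         # share of pixels we can skip
    return keep, ruled_out

# Toy 4x4 map: only two pixels have a high probability of containing the object.
toy_map = np.zeros((4, 4))
toy_map[1, 2] = 0.9
toy_map[2, 2] = 0.7

keep, ruled_out = prune_search_region(toy_map)
print(f"Fraction of image ruled out: {ruled_out:.3f}")
```

An object recognizer would then only need to be run on the pixels where `keep` is true, rather than on the whole image.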

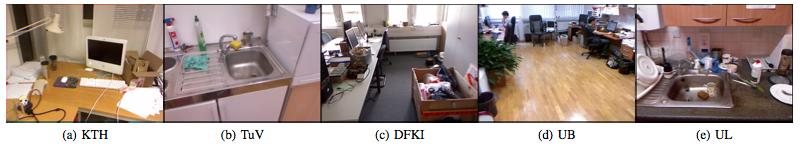

To enable a thorough evaluation, we have collected an RGB-D dataset from five different work environments in Europe: Technical University of Vienna (TuV), University of Birmingham (UB), Royal Institute of Technology (KTH), German Center for Artificial Intelligence in Saarbrücken (DFKI), and University of Ljubljana (UL). The dataset contains approximately 250,000 Kinect frames.