Active Visual Object Search in Large Unknown Environments

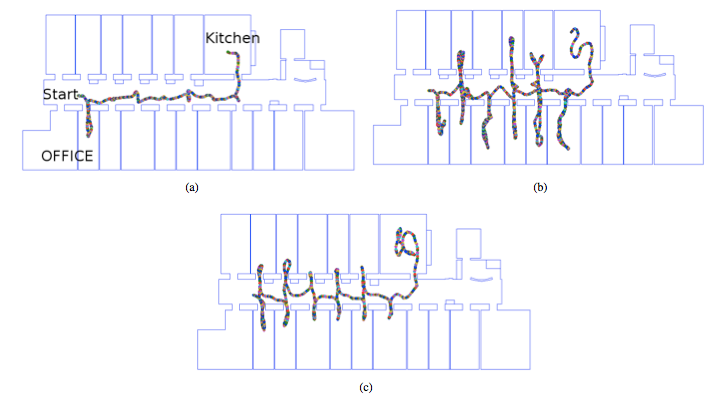

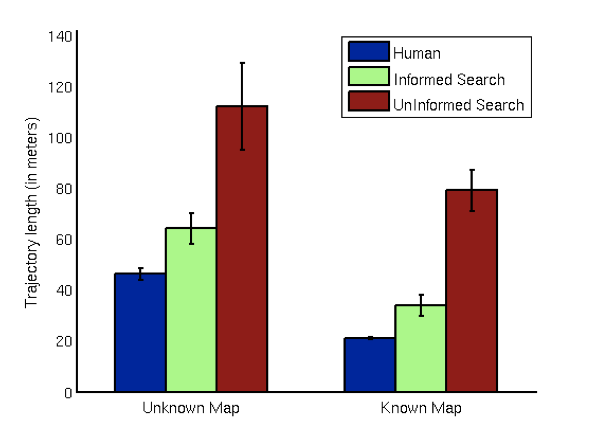

In this work, we present an active visual search system that efficiently searches for objects in large-scale environments by exploiting the semantics of the environment to limit the search space. We describe a spatial representation suited to this task and use semantic information to guide the search. We present a principled planning approach to the visual search problem and quantitatively compare our method's results with human search performance. Real-world experiments are performed on one floor of an office building containing 18 rooms, a much larger and more complex environment than those considered in previous work.