Disclaimer

Last update: 2009-04-16

As a part of ongoing work on time-delayed teleoperation, we implemented an autonomous ball-catching robot. This page is a short introduction to how this was done.

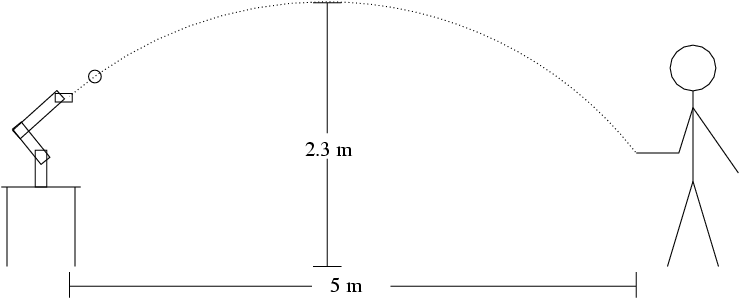

We assume a normal, slow, underhand throw from a distance of approximately 5 m. In an indoor environment, a ball can be thrown with reasonable accuracy along a parabolic path with an apex of 2.3 m, with both the thrower and the catcher situated at a height of approximately 1 m (see figure below). The flight time of the ball will then be approximately 0.8 s, and the ball travels at approximately 6 m/s.

The robot we use can move to any position in its workspace in 0.5 s. This means that we need a vision system that can give an accurate (within 4 cm) prediction of where to move the robot within 0.3 s after the ball has left the thrower's hand.
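The timing requirement above is simple arithmetic, and the short sketch below spells it out. It uses only figures given on this page (0.8 s flight time, 0.5 s robot move time, and the 50 Hz frame rate of the cameras); the function and constant names are illustrative and not taken from our implementation.

```python
FLIGHT_TIME_S = 0.8      # approximate flight time of the throw
ROBOT_MOVE_TIME_S = 0.5  # time for the robot to reach any point in its workspace
FRAME_RATE_HZ = 50       # camera frame rate


def vision_budget(flight_time_s, robot_move_time_s, frame_rate_hz):
    """Return (seconds, whole frames) available before the robot must start moving."""
    budget_s = flight_time_s - robot_move_time_s
    # Round first so floating-point error does not drop a whole frame.
    frames = int(round(budget_s * frame_rate_hz, 6))
    return budget_s, frames


budget_s, frames = vision_budget(FLIGHT_TIME_S, ROBOT_MOVE_TIME_S, FRAME_RATE_HZ)
print(f"{budget_s:.1f} s, {frames} frames")  # prints "0.3 s, 15 frames"
```

In other words, the prediction has to be good enough after roughly 15 stereo image pairs.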

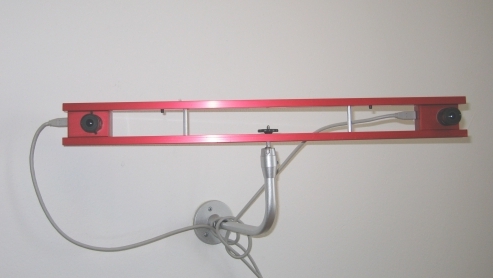

The cameras we use are a wall-mounted stereo pair from Videre Design with a 60 cm baseline. The cameras connect via FireWire to a Dell Precision 9510 workstation with a dual-core Pentium D processor at 2.8 GHz. This setup allows color images to be captured at 50 Hz at a resolution of 320x240 pixels. The cameras have a field of view of approximately 50° in the horizontal plane.
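To give a feeling for what this camera geometry implies, the following sketch estimates the stereo disparity of an object at a given distance. It assumes an ideal, rectified pinhole stereo pair, which is a simplification and not a description of our calibration; the 5 m distance used in the example is hypothetical, since the actual distance from the cameras to the ball varies along the throw.

```python
import math

IMAGE_WIDTH_PX = 320       # horizontal resolution
HORIZONTAL_FOV_DEG = 50.0  # approximate horizontal field of view
BASELINE_M = 0.60          # distance between the two cameras


def focal_length_px(image_width_px, fov_deg):
    """Focal length in pixels for an ideal pinhole camera."""
    return (image_width_px / 2) / math.tan(math.radians(fov_deg) / 2)


def disparity_px(depth_m, focal_px, baseline_m):
    """Disparity of a point at the given depth: d = f * B / Z."""
    return focal_px * baseline_m / depth_m


def depth_change_per_pixel(depth_m, focal_px, baseline_m):
    """Approximate depth change caused by a one-pixel disparity change: Z^2 / (f * B)."""
    return depth_m ** 2 / (focal_px * baseline_m)


f_px = focal_length_px(IMAGE_WIDTH_PX, HORIZONTAL_FOV_DEG)
example_depth_m = 5.0  # hypothetical distance, for illustration only
print(f"focal length: {f_px:.0f} px")
print(f"disparity at {example_depth_m} m: "
      f"{disparity_px(example_depth_m, f_px, BASELINE_M):.1f} px")
print(f"depth change per pixel of disparity: "
      f"{depth_change_per_pixel(example_depth_m, f_px, BASELINE_M):.2f} m")
```

Under these assumptions a one-pixel disparity error at several meters corresponds to a depth error of many centimeters, which is one reason to use a sub-pixel centroid and to filter over several frames rather than rely on a single measurement.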

The ball was tracked with an extended Kalman filter (EKF). The ball was detected in each image using simple color segmentation. First, the 24-bit RGB image was converted to 24-bit HSV using a lookup table. The ball was found to have a hue value of 3 and a (widely varying) saturation value of approximately 160, so all pixels in the range 1 to 5 for hue and 120 to 200 for saturation were preliminarily marked as ball pixels. A second pass eliminated noise by keeping only marked pixels with at least 3 other marked neighbors. The center of mass of the remaining marked pixels was calculated and used as the ball centroid.

Since subwindowing schemes have been shown to significantly reduce the time needed to segment moving objects, one was applied in our implementation as well. After the ball had been detected for the first time, only a subwindow where the ball was expected to be found was processed. This subwindow was calculated using the state estimate from the EKF, and its size was set to cover several times the standard deviation in position. Using this approach, the ball could be segmented and localized with reasonable accuracy in less than 4 ms of processing time per stereo image pair, giving sufficient real-time performance.
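The segmentation steps described above can be sketched as follows. This is a minimal illustration, not our actual implementation: it assumes the image is already available as a nested list of `(h, s, v)` tuples, that "neighbors" means the 8 surrounding pixels, and that the subwindow covers 3 standard deviations (the text only says "several times"). All function names are hypothetical.

```python
import math

HUE_RANGE = (1, 5)      # hue thresholds from the text
SAT_RANGE = (120, 200)  # saturation thresholds from the text
MIN_NEIGHBORS = 3       # marked neighbors required to keep a pixel


def subwindow(pred_row, pred_col, sigma_px, k=3.0):
    """Window (row0, row1, col0, col1), half-open, centered on the predicted ball
    position and extending k standard deviations (k = 3 is an assumption)."""
    half = int(math.ceil(k * sigma_px))
    r, c = int(round(pred_row)), int(round(pred_col))
    return (r - half, r + half + 1, c - half, c + half + 1)


def segment_ball(hsv, window=None):
    """Return the (row, col) centroid of the ball pixels, or None if none are found.

    hsv: 2D list of (h, s, v) tuples. window: optional half-open subwindow.
    """
    rows, cols = len(hsv), len(hsv[0])
    r0, r1, c0, c1 = window if window else (0, rows, 0, cols)
    # Clamp the window to the image borders.
    r0, c0 = max(r0, 0), max(c0, 0)
    r1, c1 = min(r1, rows), min(c1, cols)

    # Pass 1: mark pixels whose hue and saturation fall inside the ball's range.
    marked = set()
    for r in range(r0, r1):
        for c in range(c0, c1):
            h, s, _v = hsv[r][c]
            if HUE_RANGE[0] <= h <= HUE_RANGE[1] and SAT_RANGE[0] <= s <= SAT_RANGE[1]:
                marked.add((r, c))

    # Pass 2: keep only marked pixels with enough marked 8-neighbors.
    offsets = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
    kept = [(r, c) for (r, c) in marked
            if sum((r + dr, c + dc) in marked for dr, dc in offsets) >= MIN_NEIGHBORS]
    if not kept:
        return None

    # Center of mass of the surviving pixels.
    n = len(kept)
    return (sum(r for r, _ in kept) / n, sum(c for _, c in kept) / n)
```

Once a track exists, `segment_ball(hsv, subwindow(row, col, sigma_px))` restricts both passes to the region around the predicted position, which is where the reduction in processing time comes from.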

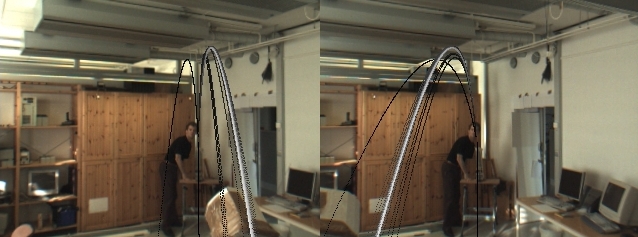

The following image shows a succession of estimated trajectories superimposed on the stereo image. The estimated trajectories are color coded: the first estimates are black, the last are white, and those in between are drawn in correspondingly lighter shades of gray. The trajectories have converged after 10 images, or 200 ms.

The actual catching position was determined by interpolating the point where the predicted ball trajectory intersects a plane that corresponds to the robot's workspace. This position was then sent via a UDP/IP connection to the control computer, which moved the manipulator to that position.
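As an illustration of the interpolation step, the sketch below samples a predicted trajectory at the frame interval and linearly interpolates between the two samples that straddle the catching plane. It makes several assumptions that are not taken from our implementation: a drag-free ballistic model, a coordinate frame with x pointing from the thrower toward the robot and z pointing up, and a catching plane at a fixed x value. The function names are hypothetical.

```python
G = 9.81  # gravitational acceleration in m/s^2


def predict_position(p0, v0, t):
    """Drag-free ballistic position at time t from initial position p0 and velocity v0."""
    x0, y0, z0 = p0
    vx, vy, vz = v0
    return (x0 + vx * t, y0 + vy * t, z0 + vz * t - 0.5 * G * t * t)


def catch_point(p0, v0, plane_x, dt=0.02, horizon_s=2.0):
    """Return the interpolated point where the trajectory crosses the plane
    x = plane_x, or None if it does not cross within horizon_s seconds."""
    prev = p0
    steps = int(round(horizon_s / dt))
    for i in range(1, steps + 1):
        cur = predict_position(p0, v0, i * dt)
        # The plane lies between two consecutive samples when the signed
        # distances to it have opposite signs (or one of them is zero).
        if (prev[0] - plane_x) * (cur[0] - plane_x) <= 0 and cur[0] != prev[0]:
            a = (plane_x - prev[0]) / (cur[0] - prev[0])
            return tuple(p + a * (q - p) for p, q in zip(prev, cur))
        prev = cur
    return None


# Hypothetical state estimate: position in m, velocity in m/s.
point = catch_point(p0=(0.0, 0.0, 1.0), v0=(5.0, 0.0, 5.0), plane_x=4.0)
print(point)
```

The default `dt` of 0.02 s matches the 50 Hz frame rate; with a step that small, the error introduced by linear interpolation is well below a millimeter for this model.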

The following clips show the first prototype implementations of autonomous ball catching. The catch rate is at present approximately 90% for benevolent throws.

The work described above is presented in detail in the following publications:

The work presented on this webpage is funded in part by the 6th EU Framework Program, FP6-IST-001917, project name Neurobotics.
